turn around...this is dangerous stuff

WELCOME to Ace's blog on AI ethics

About Me.

As a student at Duke University with a strong interest in law, ethics, and artificial intelligence, I am passionate about understanding the legal and ethical implications of emerging technologies -- be quiet about it!

Context.

hi there, hope all is well.

This blog is an attempt to bring you along on my journey as a Public Policy major learning about the increasingly popular and expanding field of AI from the lens of ethical thinking. What started as a school project to help me fix my writing turned into a passion and deep interest in the implications of emerging technologies.

Each of these posts has something to do with what I read in my Ethics and Public Policy courses, and each is an attempt to take the frameworks with which social scientists think and apply them to AI Ethics.

You’ll notice the writing quality and general understanding of the issue gets better as time goes on. We grow together.

Addressing Bias in AI: An Ethical Imperative

We all know it, or at least, we all should know it. AI has the potential to change the world. Change, however, can be good or bad, and that's the main takeaway from what I've learned about AI. AI can improve outcomes and make processes more efficient, but as with any technology, it's not immune to bias. Bias in AI can have serious negative consequences, ranging from perpetuating racial injustice to compromising safety and security. What do we do?

First, let me define what I mean by AI bias. Bias is the unjustified preference for or against people or things. It can depend on race, gender, economic status, ethnicity, and a multitude of things.

The AI variable comes in via biased training data, biased algorithms, and biased decision-making. AI can be trained on really anything, including data that records historical discrimination, and a system trained that way can make decisions that perpetuate the same discrimination.

Not only can AI bias perpetuate discrimination, it can also result in unjustified profiling and compromise safety. An example here is the 2018 accident in which a self-driving Uber failed to recognize a pedestrian crossing outside a crosswalk, a case its software had not been built to handle (BBC News 2019).

This is an issue that sits at the intersection of computer science and social science. Bias in AI is not only a technical challenge but also an ethical one. We must give the ethics that are programmed into AI a second look and acknowledge the several ethical considerations that must be taken into account when developing and deploying it, such as the following:

- Fairness: AI systems should treat all individuals and groups equally.

- Transparency: AI systems should be transparent and explainable so we know the rationale.

- Accountability: Developers of AI systems should be held accountable for any negative consequences of biased decisions or actions. Many times, this is dismissed as a tech problem or an ethics problem, but it's both.

- Diversity: AI development teams should be diverse so a variety of perspectives and experiences can be incorporated into the design process.

Where do we go from here? What do we need?

- Diverse and representative training data: AI systems should be trained on diverse and representative datasets that reflect the real-world diversity of individuals and groups.

- Fair and unbiased algorithms: Algorithms should be designed to mitigate the potential for bias and ensure fair and impartial decision-making.

- Human oversight and review: AI systems should be subject to frequent human oversight and review, to identify and correct any biases that may arise. AI learns, and we need to learn the advantages and dangers of that skill.

- Ethical guidelines and standards: Developers and operators of AI systems should adhere to ethical guidelines and standards, such as the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems, to ensure that AI is developed and deployed ethically and responsibly (IEEE SA 2019).
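To make the oversight idea a little more concrete, here is a minimal sketch of one check a human reviewer might run: comparing how often a system says "yes" to each group. This is only one narrow way to look for bias (often called demographic parity), and it is my own illustration, not a method from the sources above. The function names, the group labels "A" and "B", and the sample decisions are all made up for the example.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive decisions for each group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True when the system gave a favorable outcome.
    """
    totals = defaultdict(int)
    approved_counts = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approved_counts[group] += 1
    return {group: approved_counts[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions):
    """Return the difference between the highest and lowest selection rate."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit log: four decisions for each of two made-up groups.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(sample)
gap = demographic_parity_gap(sample)
print(rates)
print(gap)
```

A large gap does not prove discrimination on its own, and a small one does not rule it out. It is just a signal that tells a human reviewer where to look more closely.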

Bias in AI is a critical ethical issue that must be addressed to ensure that AI is developed and deployed ethically and responsibly. By taking into account the ethical considerations for addressing bias in AI and implementing strategies to mitigate bias, we can work towards creating AI systems that are fair, transparent, and accountable, and that promote social justice and equality.

References:

BBC News. 2019. “Uber in Fatal Crash Had Safety Flaws Say US Investigators,” November 6, 2019. https://www.bbc.com/news/business-50312340.

IEEE SA. 2019. “The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems.” SA Main Site. 2019. https://standards.ieee.org/industry-connections/ec/autonomous-systems/.

Leviathan and Gyges: Lessons for AI Ethics from Historical Philosophical Thought

An exploration of the issues of morality, past and present by Ace Asim.

The “Ring of Gyges” and the Ethics of AI: Why Self-Interest Should Not Trump Morality

In Plato's "Ring of Gyges" from The Republic, a shepherd named Gyges finds a magical ring that makes him invisible. With this newfound power, Gyges can commit crimes without fear of being caught or punished (Plato 2013, 335-343). This story raises an important ethical question: if we can act immorally without getting caught, why should we still choose to be moral?

One possible answer to this question is that there are intrinsic reasons to be moral, beyond just egoistic concerns. Morality asks us to consider the interests and well-being of others, not just ourselves. By acting morally, we contribute to a more just and fair society, where everyone's rights and interests are respected. This, in turn, creates a better world for everyone, including ourselves.

Moreover, moral actions can also bring us personal satisfaction and a sense of purpose. For example, helping others can create a sense of fulfillment and happiness, even if it does not immediately benefit us. Acting morally can also create a sense of trust and respect from others, which can be valuable in personal and professional relationships.

However, if we assume that morality only serves our egoistic concerns, such as maintaining a good reputation or avoiding punishment, then the decision to act morally becomes more complicated. In this case, if we are reasonably sure we can get away with doing wrong, we might be tempted to act immorally.

But even if we have a super-computer that assures us that we can get away with it, there are still reasons to be moral. For example, if we value honesty, we might choose not to deceive others, even if we know we can get away with it. If we value fairness, we might choose not to exploit others, even if we know we can benefit from it.

The decision to be moral is not just about avoiding punishment or maintaining a good reputation. There are intrinsic reasons to act morally that go beyond egoistic concerns. By acting morally, we contribute to a better society and create a sense of personal satisfaction and purpose. While the temptation to act immorally may be strong, there are still reasons to choose morality, even if we are reasonably sure we can get away with doing wrong.

This story and its take on morality have two main overlaps with AI Ethics.

Firstly, as artificial intelligence systems become more advanced and complex, they are increasingly making decisions that affect human lives, such as in healthcare, criminal justice, and autonomous machines. In these contexts, AI systems must make moral decisions that align with our values and interests, even if it may not be in their immediate self-interest, whatever that may be. This raises the question of whether we can trust AI systems to act morally, and how we can ensure they are designed and programmed to act ethically in all situations, considering complicated emotions and circumstances (Bostrom and Yudkowsky 2014, 316-334).

Secondly, the development of AI raises questions about the potential impact on society, particularly regarding issues such as job displacement, bias, and privacy. These are ethical concerns that require us to consider the broader impact of AI on society, rather than just the narrow self-interest of AI developers and users (Floridi 2018, 457-470). This highlights the importance of considering the ethical implications of AI systems, even if they may not immediately benefit those who create or use them. What would directly benefit them?

In both contexts, the issue of why we should act morally, even if it may not be in our immediate self-interest, is relevant to AI ethics. Just as individuals must consider the intrinsic value of morality beyond egoistic concerns, AI systems must be designed to prioritize ethical considerations beyond narrow self-interests.

References:

Bostrom, Nick, and Eliezer Yudkowsky. 2014. “The Ethics of Artificial Intelligence.” In The Cambridge Handbook of Artificial Intelligence, edited by Keith Frankish and William M. Ramsey, 316–34. Cambridge: Cambridge University Press. https://doi.org/10.1017/CBO9781139046855.020.

Floridi, Luciano. 2018. “AI Ethics: The Birth of a New Research Field.” Philosophy & Technology 31 (4): 457–70.

Plato. 2013. Republic. Cambridge, Massachusetts: Harvard University Press.

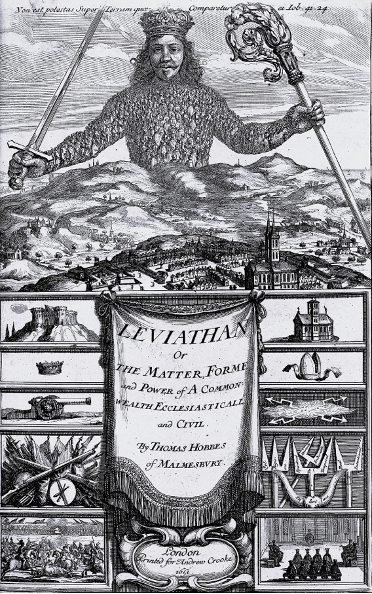

The Frontispiece of Hobbes' Leviathan and its Relevance to AI Ethics

The frontispiece of Hobbes’ Leviathan is an intriguing image, to say the least. It depicts a giant figure composed of smaller human figures, holding a sword and a crosier. The figure is often interpreted as a representation of the state or the sovereign, as described in Hobbes' political philosophy.

The figure is made up of smaller human figures, suggesting that the sovereign's power comes from the people, who collectively make up the body. The sword and the crosier symbolize the two types of power wielded by the sovereign: the sword being the power of coercion and force, and the crosier being the power of religion and persuasion. Together, these two forms of power enable the sovereign to maintain order and stability in society.

The frontispiece is also related to Hobbes' egoistic philosophy. According to Hobbes, individuals are fundamentally motivated by self-interest and a desire to avoid harm. This leads to a state of nature in which life is "solitary, poor, nasty, brutish, and short" (Hobbes 1651, 77). To escape this state of nature, individuals must enter a social contract with one another, agreeing to give up some of their liberties in exchange for the protection and security provided by the state (Floridi 2019).

The frontispiece illustrates this idea by depicting the sovereign as a giant figure, representing the collective power of the people, who have given up some of their power to the sovereign to gain protection and security. The image also shows the people beneath the monarch, suggesting that they are subordinate to the state and that their interests must be subordinated for the greater good of society.

Concerning AI ethics, the frontispiece raises questions about the role of AI in society and the relationship between AI and individuals. Just as the sovereign in Hobbes' political philosophy holds power over individuals, AI systems may hold significant power and influence over human lives. This raises concerns about the potential impact of AI on individual rights, freedoms, and interests (Bostrom 2017).

Moreover, just as individuals in Hobbes' social contract theory must give up some of their liberties in exchange for the protection provided by the state, individuals in a society that includes AI may also need to give up some of their liberties in exchange for the benefits provided by AI systems. This requires careful consideration of the ethical implications of AI systems, including questions about how to balance individual interests and liberties with the greater good of society.

The frontispiece of Hobbes' Leviathan is a powerful image that conveys important philosophical ideas about egoism and social contract theory. It raises questions about the role of power and authority in society, and how individuals must balance their self-interest with the greater good of society. These questions are relevant to AI ethics, as we consider the ethical implications of AI systems and their impact on individual rights and freedoms.

References:

Bostrom, Nick. 2017. Superintelligence: Paths, Dangers, Strategies. Oxford: Oxford University Press.

Floridi, Luciano. 2019. The Logic of Information. Oxford University Press.

Hobbes, Thomas. 1651. Leviathan. Mineola, New York: Dover Publications, Inc.

Virtue-Centered Theory vs. Action-Centered Theories: Which Ethics Should AI Follow?

Let's get a bit more focused today. I want to emphasize that this blog is to follow my journey in learning about ethics and combining it with my knowledge of computers and machine learning, to hopefully shed more light on the intersection between social science and machine learning, namely AI Ethics.

In my Ethics and Leadership class, I've been learning about virtue-centered theory and action-centered theories. The two best-known action-centered theories are utilitarianism and deontology; virtue ethics is the virtue-centered alternative.

Why not use ChatGPT to help me describe this to you? If I asked ChatGPT to help explain what virtue and action-centered theories are in simple terms, this is the response I get:

"Virtue-centered theories, as the name suggests, focus on the virtues or character traits that a person should cultivate in order to live a good life. According to these theories, a person who develops virtues like honesty, compassion, and courage will naturally tend to make good choices and act in a morally upright way. Rather than focusing on specific actions, virtue-centered theories emphasize the importance of developing a virtuous character that guides one's actions.

On the other hand, action-centered theories focus on specific actions and the consequences of those actions. These theories suggest that the rightness or wrongness of an action depends on its consequences, such as whether it produces the greatest good for the greatest number of people or whether it violates someone's rights. In contrast to virtue-centered theories, action-centered theories place less emphasis on the character of the person performing the action and more on the consequences of the action itself. Examples of action-centered theories include utilitarianism and deontological ethics" (OpenAI 2022).

Using this information, I want to answer the questions asked of me by my Ethics and Leadership professor: What advantages does a virtue-centered theory have over action-centered theories like utilitarianism and deontological approaches? What are the drawbacks? Can virtue ethics ever tell us how to act in a given situation?

I was raised to believe that it is okay to make a mistake, so long as I had good intentions and learned from it. I guess that means that I was raised on virtue-based ethics. However, I believe our world doesn't really care about intentions and cares more about results, i.e. utilitarianism; perhaps that explains all the corrupt businessmen around us who are all-powerful (rich) and make most of the rules around here.

A virtue-centered theory can be applied to any scenario, and what it asks of us is always within reach. What goes in (intentions) is almost always in our control. What comes out, however, isn't. Life is unexpected, so I don't think we should be held responsible for anything that goes wrong outside of our control when our intentions were based on common, understandable morals. Action-centered theories give some leverage to luck, which is not a very good thing to do. This can be someone's life, and something as seemingly insignificant as the weather can change everything. It's not right.

The stronger and more experienced one's morals are, the easier it is to make choices using virtue ethics. Virtue ethics can tell one how to act so long as he has strong morals that tell him to do x or y in a given situation to be the best person he can be.

Allow me to roll out the red carpet for this question: should AI be programmed based on virtue-based or action-based ethics?

I think we can all agree that we don't want AI to turn on us. We all know that computers take an input and create an output. The problem is, computers are too strict for humans. We live in a grey area, and computers are black and white. AI, however, is interesting. It claims to be grey because of its ever-learning qualities, but can it learn emotions and feelings? We live in a grey area because of our emotions.

In my class, I was told to ask ChatGPT different ethical questions to try and gauge where its loyalties lie. I asked it to make text intentionally difficult to read, the same as corporations do with the fine print in their contracts. Sometimes it said "I can't do that," and sometimes it did just that. Some other students in my class asked it other questions regarding ethics, and the AI claimed not to have feelings or any say in ethical situations. Something really interesting was the question of AI's survival. My professor mentioned asking ChatGPT what it would do if its survival was threatened, and it said that it would shut itself down before it would do something outside of its moral guidelines. From this answer, it seems that it has a moral code, that it knows what's inside and outside of that code, and that it has the potential to act outside of it but would stop itself from doing so. This is weird. Would it stop itself? Would it sacrifice itself to avoid doing something bad? Isn't that virtue ethics then? It has "good morals" and cares about its intentions rather than the result.

If it was based on utilitarianism, it would probably say that it would continue to survive, as it would be self-aware and understand how its contributions to the world are not worth losing, right?

I think that what we fear is this attitude of utilitarianism. We don't want something with intelligence to act in black and white because it will always threaten us and be threatened by us. It's a match made in hell.

See, even if AI behaves well 99 times for every 1 time it acts up, it's still dangerous because AI can learn. That makes it possible to go from 1 to 2, and from 2 to 99, right? As a race, we have made so many mistakes and acted against ethical principles so often. Who are we to program an AI's ethics? We've acted out many times. Doesn't that make us worth getting rid of to make the world more ethical?

We want something with intelligence and emotions, but that is incredibly difficult to make happen. Some claim it's impossible. I'm not sure we're there yet, but we have the potential to be soon, and the AI right now is just a stepping stone to get there. The question is, should we even go there? I'm going to let you guys ponder that yourselves.

References:

OpenAI. 2022. “ChatGPT.” https://chat.openai.com/chat.

What Makes a Good Leader?

When looking at a list of different virtues, I believe that a leader must develop all of them; while this may look impossible, I consider some virtues umbrella terms for others that are at the core of being an effective leader. Those are adaptability, knowledge, patience, empathy, humility, and courage.

Life is complicated and unexpected, and all washes away with time. To keep up with life's ever-changing nature, a leader must master the skill of adaptability and stay away from stubbornness. Adaptability, in essence, is flexibility. A leader does not know the future and must be prepared to tackle any problem that is thrown at him; this may mean adopting unconventional methods. He must have a fluid toolbox, ever-changing like life, stemming from continuous learning and awareness.

To master adaptability, a leader must be knowledgeable. Knowledge comes from books and experience; the leader must have both. Book learning can only get one so far, and so can experience. Combined, they become the ultimate tool. Being a leader means dealing with many different people. To do so properly, he must be educated on those people and their needs.

In addition to being knowledgeable, a leader must also be empathetic to the needs of his people. A leader must understand his people. How can a leader best understand his people? Not only from learning about them but also from understanding and sharing their emotions. A leader works for the people, and ultimate customer satisfaction comes when the wants and needs are acknowledged, understood, and addressed. He can only do his job properly if he helps his people, but if he is selfish, he is not a leader, as he speaks only for himself.

From a young age, the leader must be educated on virtues, morals, and ethics. The act of ingraining these creates a strong attachment to believing in and standing up for them. A leader must have courage: he must stand up for what is right based on his education and experience, and also take risks. Cowardice is what leads people to negative progress or no progress at all. Courage also comes in the form of following what is right despite all the opposing forces, and remaining grounded and centered. Experience teaches us that many leaders are easy to manipulate with bribery. A true leader can pass this test and stay on the right path.

If the leader, however, does not stay on the right path, he must come forward and be humble. He must show humility, not pride. He must acknowledge his mistakes and create a plan to recover from them. A leader is human and he cannot be perfect. As long as this is admitted, a leader becomes someone the people can confide in and trust. If he is prideful, he is not a leader; he is simply someone in power.

Falling Prey to Bias: My Moral Mess-Up

Growing up, I was bullied by my classmates, teachers, and friends. As a result, I’ve become very defensive, always observing people to decide whether I should become friends with them or stay away from them because they could bring harm to me.

Sometimes, however, I have read the situation poorly and misunderstood people. As I’ve become increasingly defensive and self-conscious, I am always watching out for comments about myself, especially if I feel they come from a bad place or are condescending. I have also had negative experiences with specific types of people, and when I feel someone has similar qualities, I assume they will be rude or bad to me.

Over the summer, I was introduced to someone my age who seemed to fit the category of a condescending bully, but after getting to know him and his intentions better, I’ve come to realize that he is a nice person and that I jumped the gun.

I judged him on my past negative experiences and formed my opinion before getting to know him, rather than after. My vices of impatience and stubbornness led me there. As James and Wooten put it in terms of cognitive biases, I fell victim to the "Anchoring Effect," in which the first impression sinks in: "The way we initially frame that [a] threat tends to stick—we anchor ourselves to it. If the situation changes, it’s very hard for us to shift or adapt our thinking fast enough to keep up with the evolving risks" (2022, 19). My sisters have confronted me, telling me that I need to take a second look at people and not judge them at the first meeting, but because I have been severely misunderstood my whole life, I feel bitter and don’t want to give them that.

However, this can no longer be the case. Everybody deserves to be understood and given a full look. We are all different, and we have different ways of expressing ourselves and communicating. Thus, we cannot fully apply one experience or personality to another person.

I am in the process of learning to get to know people better: to persist through multiple conversations on multiple topics in different settings, and to try to understand people and their intentions before acting on any judgments, especially misjudgments, I have.

References:

James, Erika H., and Lynn Perry Wooten. 2022. The Prepared Leader: Emerge from Any Crisis More Resilient Than Before. Philadelphia: Wharton School Press.

We have a RESPONSIBILITY to all

Kahane's piece, "History and Persons," in short, provides insight into the individual's responsibility to consider the moral implications of their actions, since individuals can make a difference in shaping the course of history.

I believe that this 100% applies to my situation. I'm not sure why I'm here and what my contribution to this world is, but I know that my actions will have consequences for other people. The more influence I have, the more people my actions can affect. Whatever it is, my being here leaves an impression on history in some way or another, small or big. In any case, I need to make sure that I do right by myself and others and do what is right in my mind with a clear rationale. If I want to be remembered as anything, I want to be remembered as a person with a straight moral compass who made educated decisions with a clear rationale that hurt the fewest people and helped the most. This applies to everyone, and I hope people do this, as we don't see it often. We see those in history as simply players, not as more than that. We see it all as one big puzzle, not as the pieces coming together. Looking at the pieces will shed light on an individual's effect on people beyond himself.

References:

Kahane, Guy. 2017. “History and Persons.” Philosophy and Phenomenological Research 99 (1): 162–87. https://doi.org/10.1111/phpr.12479.

I hate grades

I hate grades. I hate school. I do, however, love learning. Unfortunately, the equation made from these variables is as follows: learning + grades = school. I believe that it should just be: learning = school.

Grades have never helped me. I understand that they are used as a metric for understanding the material, but they are not an accurate measure for me. This is because of the nightmare many of my teachers have made them.

Thanks to teachers and endless rules, grades have become a forethought rather than an afterthought. The problem with this? We think about grades before and during our project when we should just focus on trying our best. This is also on a case-by-case basis, I will say. This is because the teacher calls the shots, and the assignments may require different approaches. But, despite all of the differences, education should never be stressful.

Learning is hard. Learning takes time, energy, and a good teacher. Nowadays, teachers don't have time. They have too much to do beyond their pay grade, so they're tired, which affects their performance. Others are just bad teachers. A common case is the PhD. Just because someone is an expert in a particular part of his field does not mean he will be a good teacher. Why? A teacher's real skill is stooping down and starting from 0 with the student. PhDs often start from 100 and have a hard time explaining the concept because it's such common knowledge for them. It's like explaining what the word "nice" means. We just know it. It's hard to describe without using the actual word.

Grades are no indicator of understanding. Cheating is a thing, and help from students is another. Sometimes, these two count as the same thing. We ask students for the answer, not the concept. We care too much about grades because that's the student currency, similar to money in the working world. We break ourselves over grades, just as adults do over money. Grades are the same as your tax bracket and your credit score. The better your grades, the easier your life is. Same thing with your financial situation. These currencies mean everything. Ever read Death of a Salesman? We had to read that as an assignment. I used SparkNotes so that I wouldn't fail the test. I almost lived the story. I just didn't die in the end as the salesman did for the dream, but I was nearly dead (sleep-deprived, hungry, and dehydrated) taking the test. I got a good grade on it, but was it worth it?

The funniest part is that the learning happens after the grade, as the grade is a validation of understanding. If I make a mistake on my math test and I get a bad grade, I learn to never make that mistake again. Why did it take a bad grade for me to learn? I had a teacher this semester who would not allow me to ask her for help until after she graded the assignment. What is the point of this? She said it's because it's unfair to other students. In what sense? Am I not allowed to ask questions because other students don't ask theirs? Are we now going to generalize all students' learning approaches?

Grades would stress me out so much that I would forget to drink water until the headache hit. I didn't sleep, I didn't eat, and I didn't exercise. I wasn't brought up with the understanding that exercise was a required part of a routine; I was brought up to believe that school was #1. In this pursuit, I prioritized my grades over my health. Now, I don't have thirst cues and my growth was stunted. I'll say this now clearly: it was not worth it.

My solution? Follow Khan Academy's structure. Sal Khan teaches from the concept only. He starts with an empty blackboard and draws everything for you. Teachers just show us PowerPoints, the least effective method. Why? There's no stooping down; they don't start from 0. They present you with level 100 and expect you to know it.

From here, you can employ grades, but not publicly. I really can't think of any metric better than grades, but there is a serious problem in teaching and environment, which translates horribly to grades. Maybe if teachers were better at their job, grades wouldn't be a worry. Maybe I wouldn't have to re-learn the material after class or learn only for the final to be able to tackle tricky questions.